I am Wenxuan Song (宋文轩 in Chinese), a first-year Ph.D. student in the ROAS Thrust at the Hong Kong University of Science and Technology (HKUST), Guangzhou campus, advised by Haoang Li. I am the first author of a paper that received the AAAI 2026 Best Paper Award (one of 5 award-winning papers among 30,948 submissions). I have published 8 papers as first or co-first author in leading conferences and journals, including ICLR, ICRA, AAAI, IROS, and the IEEE Transactions series.

Previously, I received my Bachelor’s degree in robotics from Harbin Institute of Technology (HIT), Weihai Campus, advised by Minghang Zhao and Bo Huang. I was a visiting student at MiLab, Westlake University, supervised by Donglin Wang, and I have kept in close contact with Pengxiang Ding and Han Zhao ever since; they have taught me a great deal. I also spent time at Monash University, supervised by Zongyuan Ge and Xuelian Cheng.

My research focuses on embodied intelligence. My work, ReconVLA: Reconstructive Vision–Language–Action Model as Effective Robot Perceiver, received the AAAI 2026 Best Paper Award and has attracted over 180 stars on GitHub. I am also a co-founder of the open-source organization OpenHelix Team; the repositories I have substantially contributed to there have collectively garnered more than 3,600 stars.

My work has been adopted and cited in industrial models from companies such as Robbyant and Xiaomi, and it has been featured in news media such as MIT Technology Review.

Goal: Pushing the boundaries of the world through robotics.

Focus: Building dexterous and generalizable robotic systems through Vision-Language-Action models and world models.

Email: songwenxuan0115 [AT] gmail.com

🔥 Projects

- Embodied-AI-Paper-TopConf: We collect published papers in the field of embodied intelligence.
- LLaVA-VLA: We propose a simple yet effective Vision-Language-Action model built upon the popular open-source VLM LLaVA.
- OpenHelix: We propose an open-source dual-system VLA model for robotic manipulation.

📝 Selected Publications

- For the full publication list, please refer to my Google Scholar.

Spatial Forcing: Implicit Spatial Representation Alignment for Vision–Language–Action Models

Fuhao Li*, Wenxuan Song*, Han Zhao, Jingbo Wang, Pengxiang Ding, Donglin Wang, Long Zeng, Haoang Li

\* denotes equal contribution; ‡ denotes project lead.

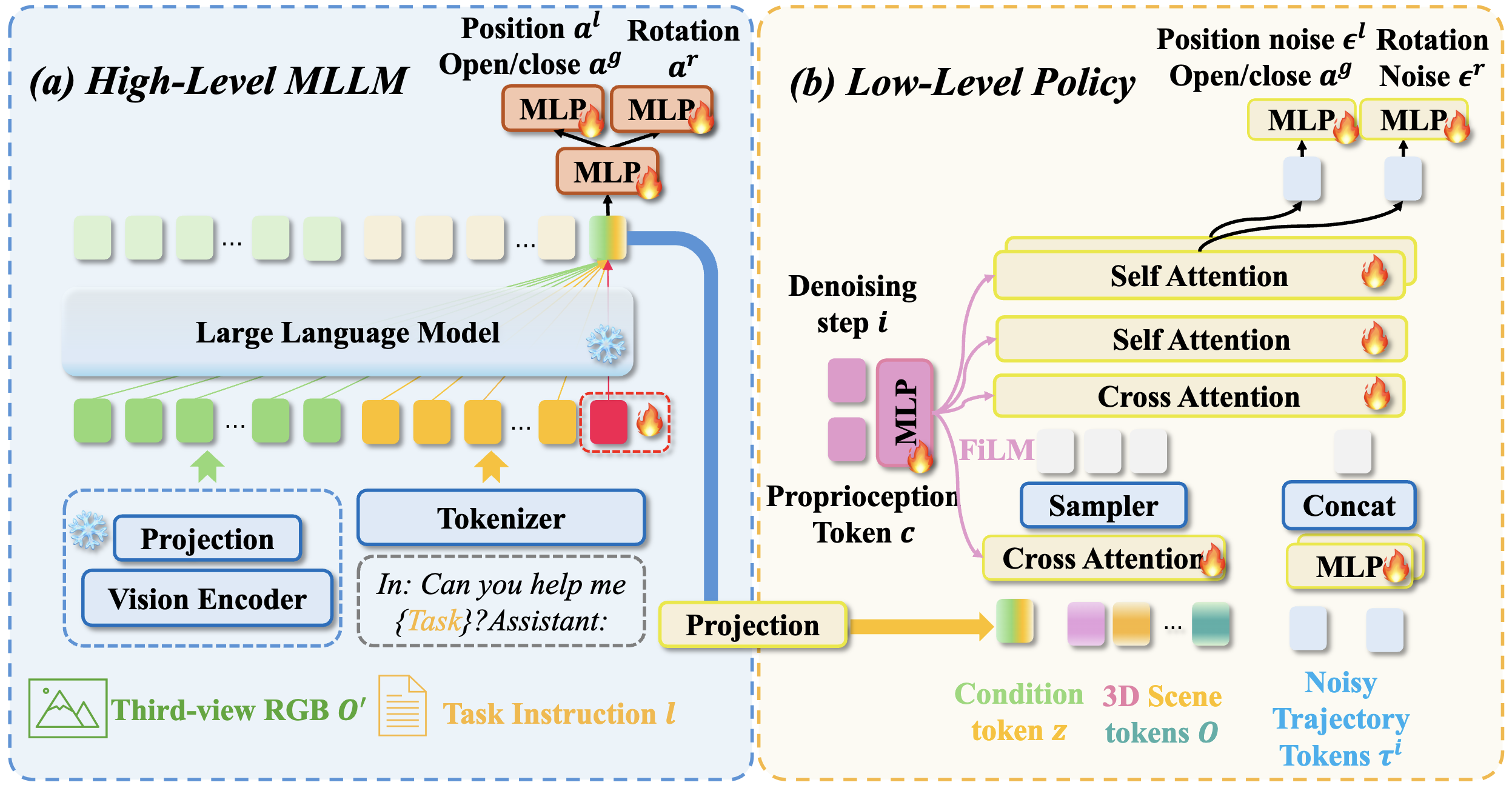

Unified Diffusion VLA: Vision–Language–Action Model via Joint Discrete Denoising Diffusion Process

Jiayi Chen, Wenxuan Song, Pengxiang Ding, Ziyang Zhou, Han Zhao, Feilong Tang, Donglin Wang, Haoang Li

Wenxuan Song, Ziyang Zhou, Han Zhao, Jiayi Chen, Pengxiang Ding, Haodong Yan, Yuxin Huang, Feilong Tang, Donglin Wang, Haoang Li

VLA-Adapter: An Effective Paradigm for Tiny-Scale Vision–Language–Action Models

Yihao Wang, Pengxiang Ding, Lingxiao Li, Can Cui, Zirui Ge, Xinyang Tong, Wenxuan Song, Han Zhao, Wei Zhao, Pengxu Hou, Siteng Huang, Yifan Tang, Wenhui Wang, Ru Zhang, Jianyi Liu, Donglin Wang

Wenxuan Song, Jiayi Chen, Xiaoquan Sun, Huashuo Lei, Yikai Qin, Wei Zhao, Pengxiang Ding, Han Zhao, Tongxin Wang, Pengxu Hou, Zhide Zhong, Haodong Yan, Donglin Wang, Jun Ma, Haoang Li

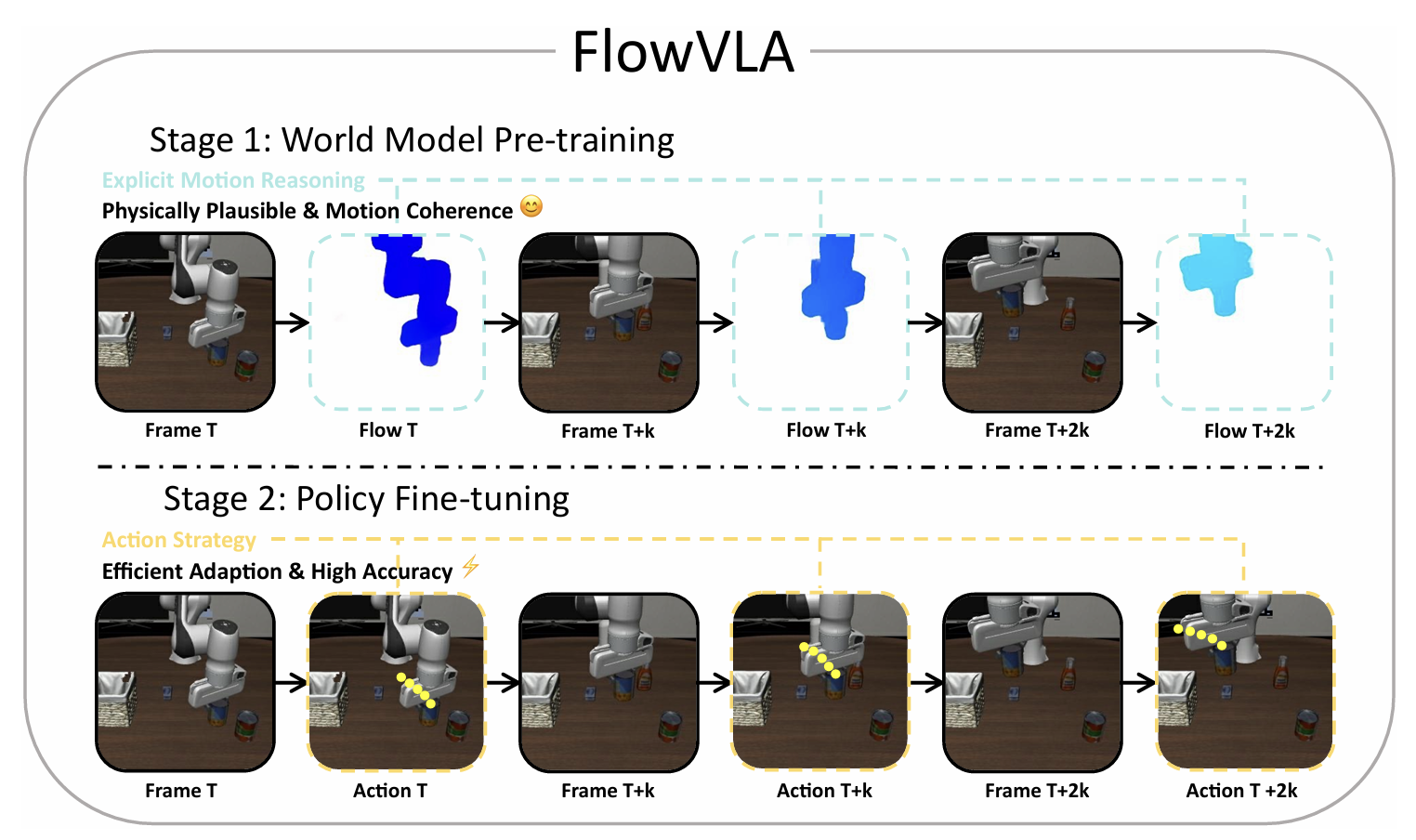

FlowVLA: Thinking in Motion with a Visual Chain of Thought

Zhide Zhong, Haodong Yan, Junfeng Li, Xiangchen Liu, Xin Gong, Wenxuan Song, Jiayi Chen, Haoang Li

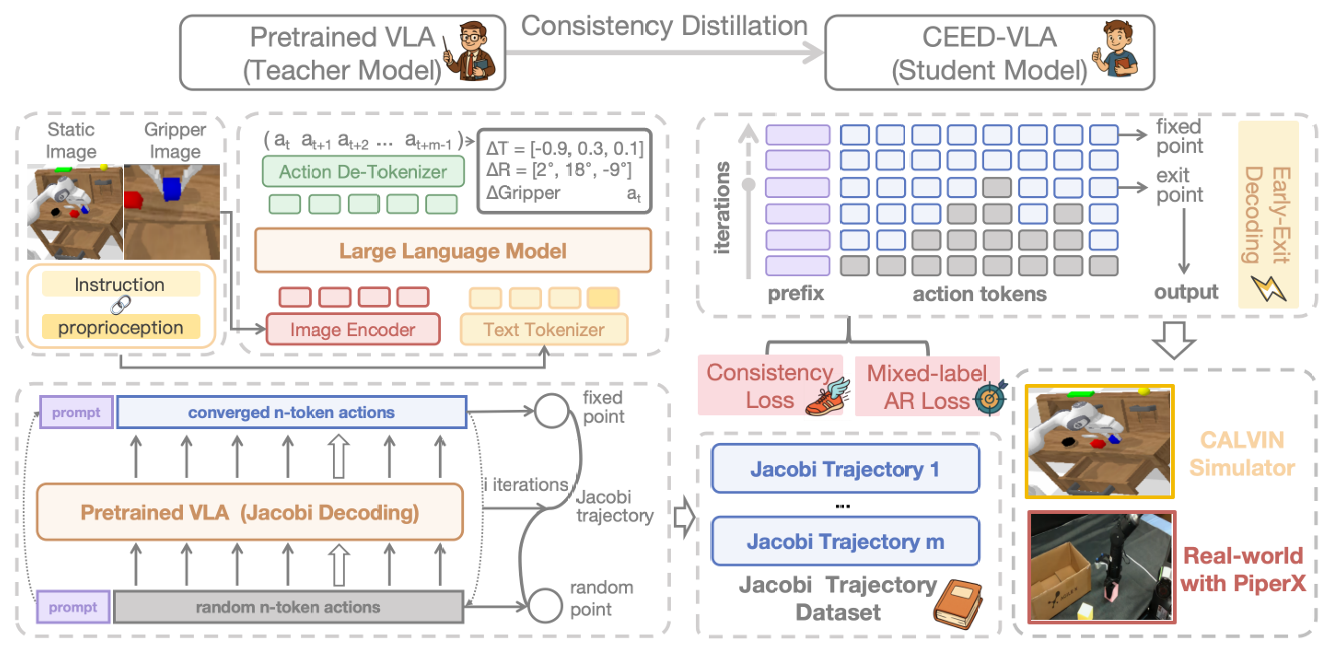

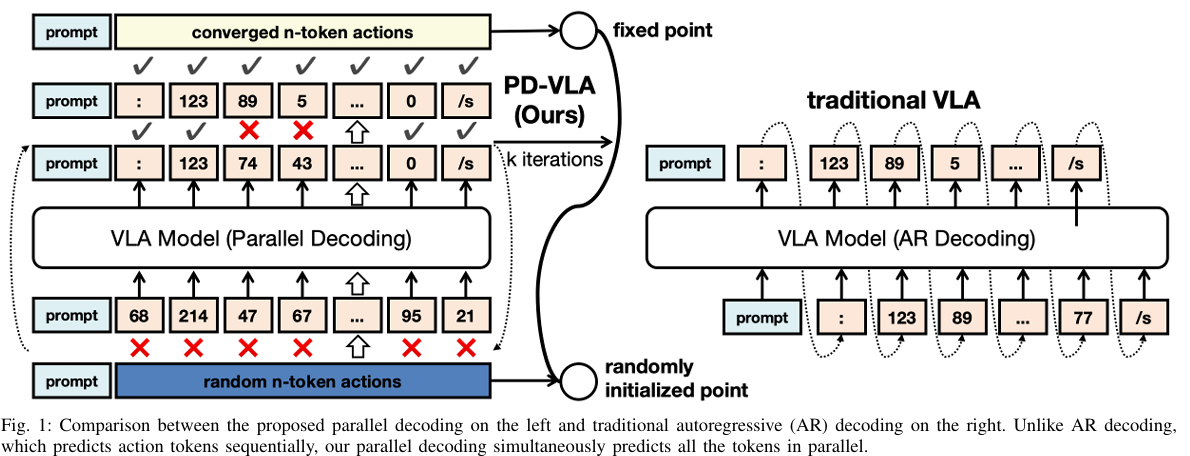

CEED-VLA: Consistency Vision-Language-Action Model with Early-Exit Decoding

Wenxuan Song, Jiayi Chen, Pengxiang Ding, Yuxin Huang, Han Zhao, Donglin Wang, Haoang Li

Wenxuan Song, Jiayi Chen, Pengxiang Ding, Han Zhao, Wei Zhao, Zhide Zhong, Zongyuan Ge, Jun Ma, Haoang Li

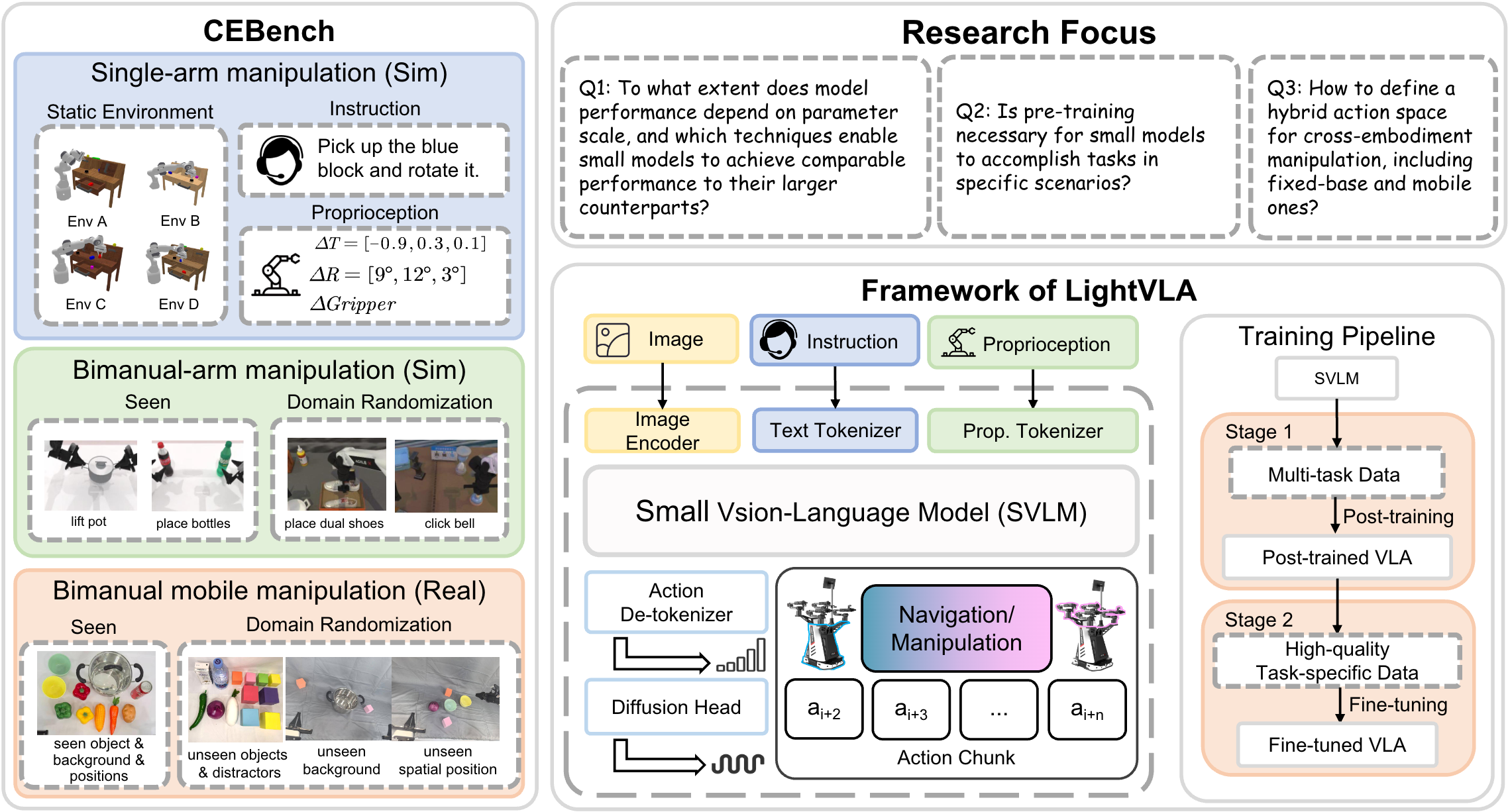

Can Cui, Pengxiang Ding, Wenxuan Song, Shuanghao Bai, Xinyang Tong, Zirui Ge, Runze Suo, Wanqi Zhou, Yang Liu, Bofang Jia, Han Zhao, Siteng Huang, Donglin Wang

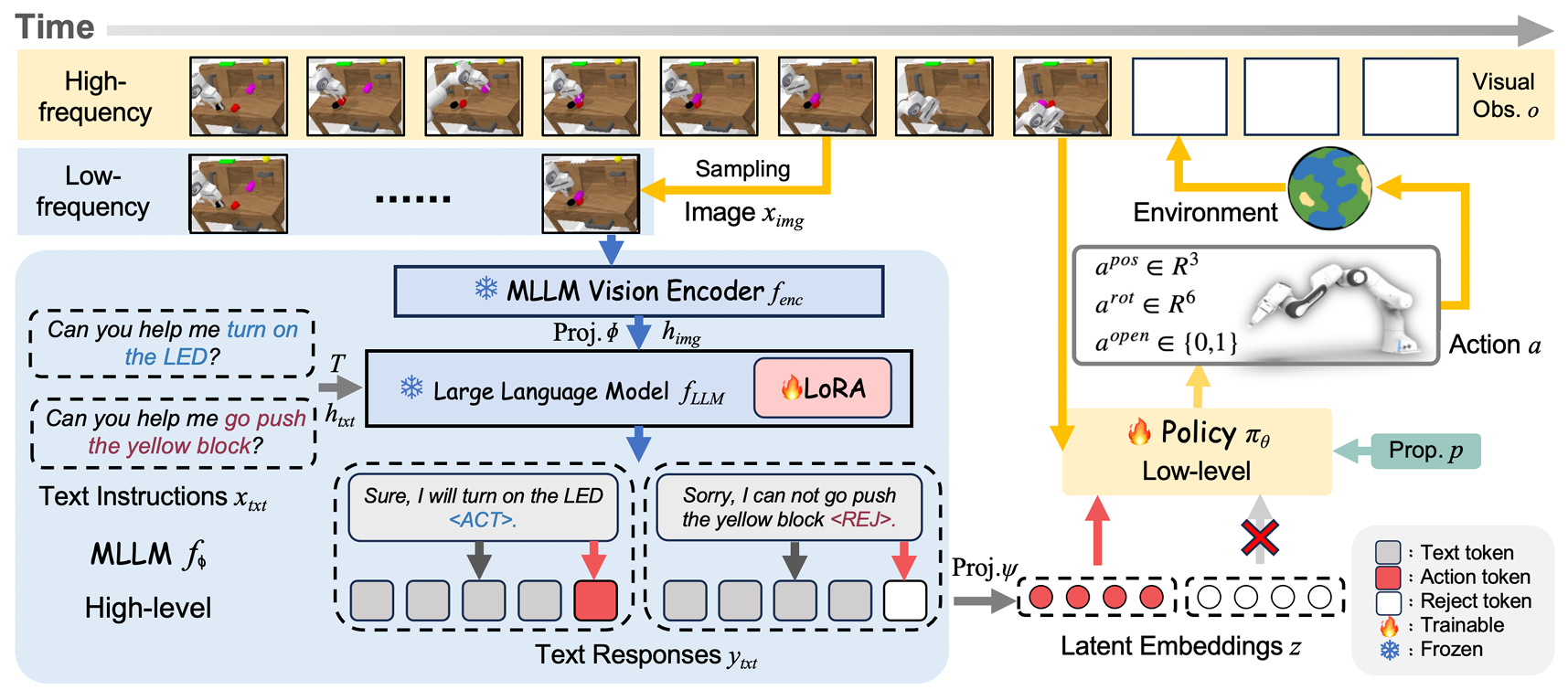

RationalVLA: A Rational Vision-Language-Action Model with Dual System

Wenxuan Song, Jiayi Chen, Wenxue Li, Xu He, Han Zhao, Can Cui, Pengxiang Ding, Shiyan Su, Feilong Tang, Donglin Wang, Xuelian Cheng, Zongyuan Ge, Xinhu Zheng, Zhe Liu, Hesheng Wang, Haoang Li

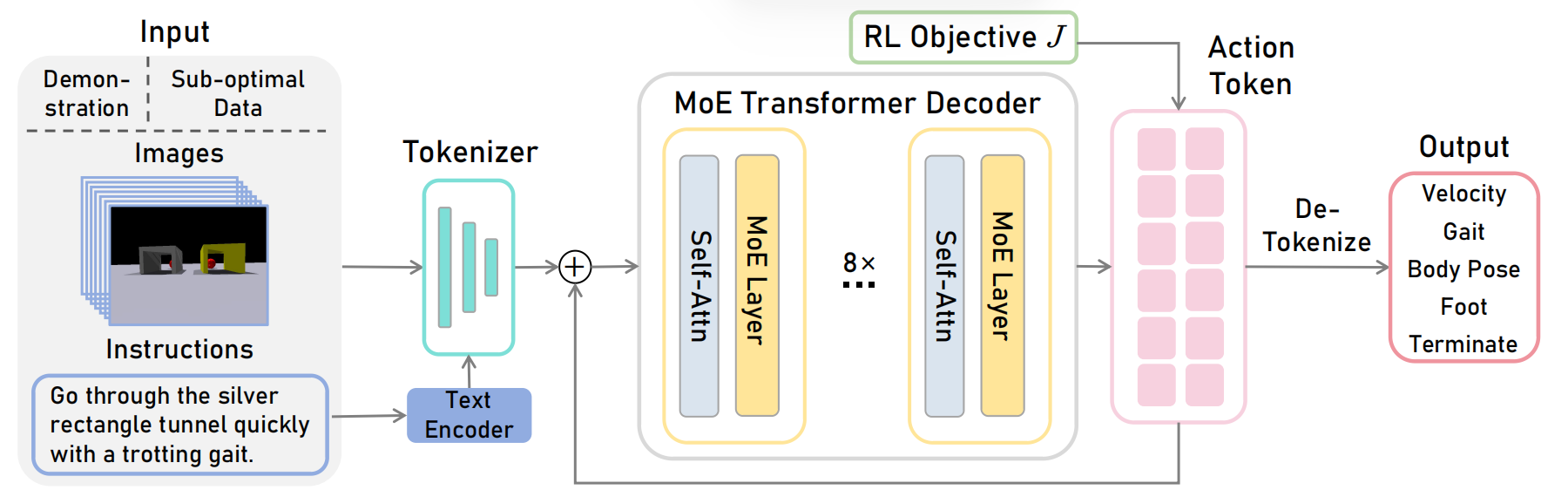

MoRE: Unlocking Scalability in Reinforcement Learning for Quadruped Vision-Language-Action Models

Han Zhao, Wenxuan Song, Donglin Wang, Xinyang Tong, Pengxiang Ding, Xuelian Cheng, Zongyuan Ge

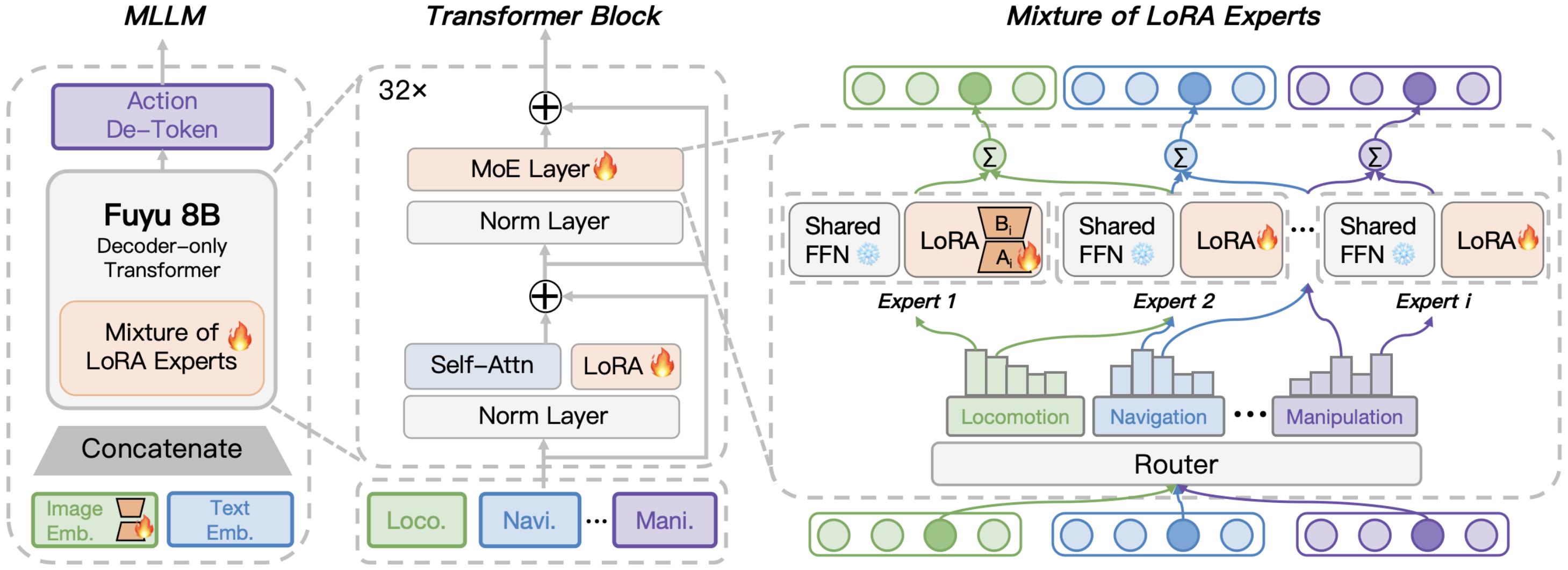

GeRM: A Generalist Robotic Model with Mixture-of-experts for Quadruped Robots

Wenxuan Song, Han Zhao, Pengxiang Ding, Can Cui, Shangke Lyu, Yaning Fan, Donglin Wang

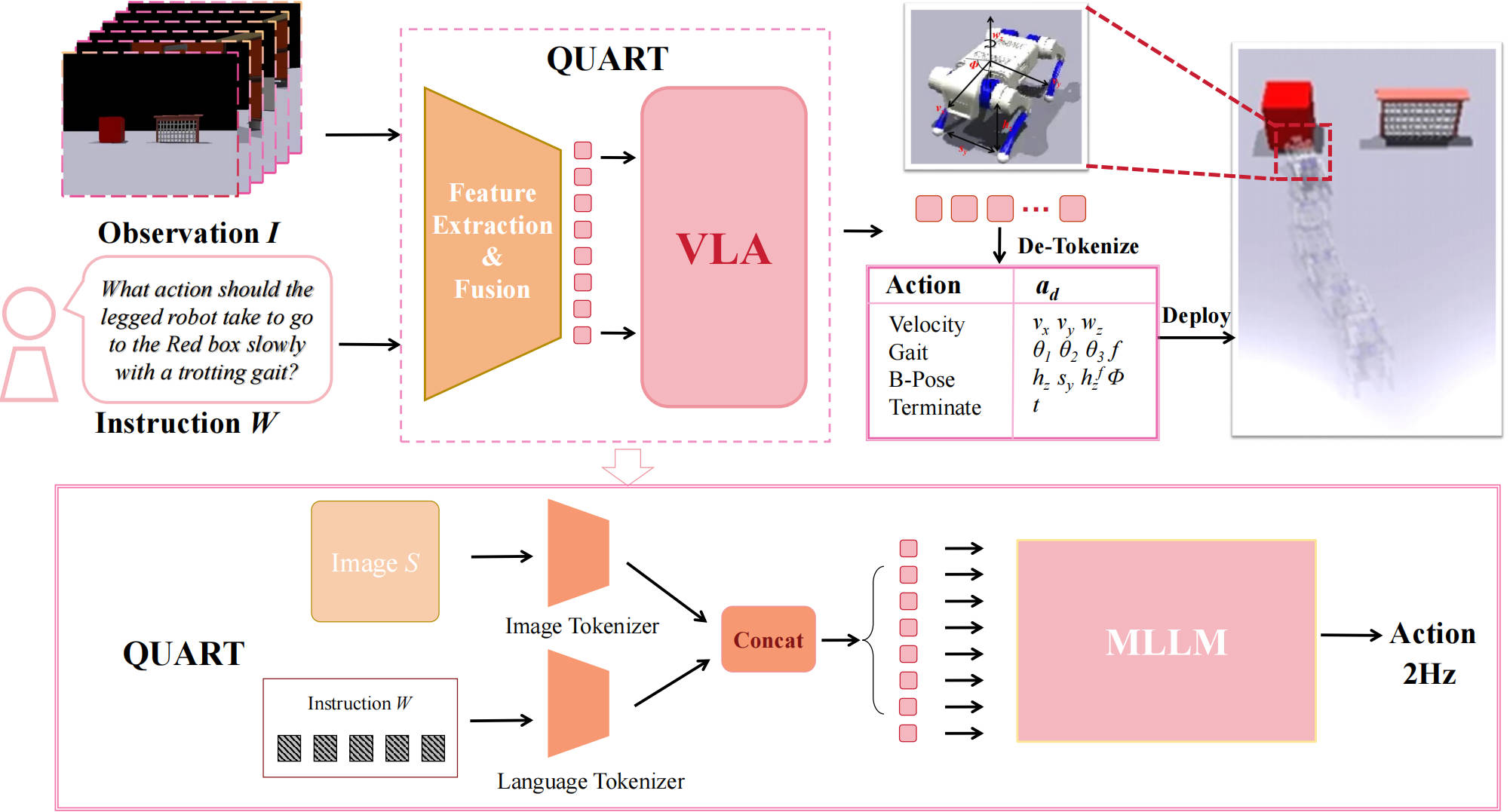

QUAR-VLA: Vision-Language-Action Model for Quadruped Robots

Pengxiang Ding, Han Zhao, Wenxuan Song, Wenjie Zhang, Min Zhang, Siteng Huang, Ningxi Yang, Donglin Wang

💬 Invited Talks

- Invited talks at the Pine Lab, Nanyang Technological University (group led by Prof. Ziwei Wang); AIR, Tsinghua University (group led by Prof. Yan Wang); Auto Lab, Shanghai Jiao Tong University (group led by Prof. Zhipeng Zhang); Zhejiang University (group led by Prof. Chunhua Shen); The Hong Kong University of Science and Technology (Guangzhou) (group led by Prof. Jia Li); and the BAAI Innovation Center, among others.